Integrations Overview

Integrations provide the tools for sharing data with other systems via file or API. Supported formats include flat file, JSON, XML and SOAP. Mapping and translation tools allow existing or legacy files and APIs to be consumed and applied as needed.

- Data Connections: a remote server's endpoint and credentials, stored together for reuse

- Imports: retrieve data from external files or APIs

- Exports: send data to external files or APIs

- Import & Export Logs: a log of all data processed by the import and export services

- Export Queue: data in transit that is waiting to be exported

- Embedding & Theming: embedding the application within third-party pages

Data Connections

Data Connections store an endpoint and its credentials so they can be reused across multiple import and export services. A Data Connection includes the following information about an endpoint (SFTP, API, etc.):

| Name | Description |
|---|---|
| Connection Type | The type of connection, such as SFTP, API or Email |
| Host URL | The server endpoint, such as https://example.com (top level only) |
| Username/Password | Credentials for the connection; for SFTP this is the username/password established by the SFTP account owner; for APIs this is the |
| Authorization | For API/HTTPS connections, sets the style of authentication (e.g. Basic Auth, JWT/Bearer Token) |
| Connection Cache | Optionally sets how long to cache the connection before re-authorizing |
| Paging | Optionally sets the number of records the server can receive in one payload, so that multiple records can be sent together |
| Options | Optionally adds extra headers, such as an API key or other unique identifier |

Once created, a Data Connection can be linked to import and export services so that connections and authentication are reused and automated. Multiple Data Connections can point to the same server endpoint when the credentials depend on the type of API being consumed.
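To make the table concrete, here is a minimal sketch of how a Data Connection's fields might combine into request details: an Authorization header built from the authorization style, extra headers from Options, the Host URL joined with a path held by an individual Import or Export, and Paging used to split records into payloads. The `DataConnection` class, its field names and the `"basic"`/`"bearer"` values are hypothetical illustrations, not the platform's API, and Connection Cache handling is omitted.

```python
import base64
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional


@dataclass
class DataConnection:
    """Hypothetical model of a Data Connection record (field names are illustrative)."""
    connection_type: str                 # e.g. "SFTP", "API", "Email"
    host_url: str                        # top-level endpoint only, e.g. "https://example.com"
    username: str = ""
    password: str = ""
    authorization: str = "basic"         # "basic" or "bearer" in this sketch
    token: Optional[str] = None          # used when authorization == "bearer"
    page_size: int = 1                   # Paging: records per payload
    options: Dict[str, str] = field(default_factory=dict)  # extra headers, e.g. an API key

    def headers(self) -> Dict[str, str]:
        """Build request headers from the authorization style plus any Options headers."""
        hdrs = dict(self.options)        # copy so the stored options are not modified
        if self.authorization == "basic":
            raw = f"{self.username}:{self.password}".encode("utf-8")
            hdrs["Authorization"] = "Basic " + base64.b64encode(raw).decode("ascii")
        elif self.authorization == "bearer":
            if not self.token:
                raise ValueError("bearer authorization requires a token")
            hdrs["Authorization"] = f"Bearer {self.token}"
        return hdrs

    def url(self, path: str) -> str:
        """Join the shared host URL with a path stored in an individual Import or Export."""
        return self.host_url.rstrip("/") + "/" + path.lstrip("/")

    def pages(self, records: List[dict]) -> Iterator[List[dict]]:
        """Split records into payloads of at most page_size records."""
        size = max(1, self.page_size)
        for start in range(0, len(records), size):
            yield records[start:start + size]
```

Keeping the path out of the connection mirrors the separation described above: one connection holds the host and credentials, while each service supplies its own path.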

Imports

Imports connect to remote systems and retrieve data via file (SFTP) or API (HTTPS). Generally, you create one Import per file or API you want to consume. You can also create multiple Imports that consume the same file or API for different purposes (e.g. a different target datasource).

Imports contain the following definitions:

| Item | Description |

|---|---|

| Data Connection & Path | The Data Connection to use for endpoint and authentication, along with the path/filename within the endpoint; this is how multiple Import service can share a Data Connection as the interior path and routing is stored in the Import |

| Source & Filters | After retrieving the raw data, provides parsing instruction (CSV, JSON, XML, Fixed..) and raw filters (e.g. ignore rows containing a certain value) |

| Destination | Choose the target destination (datasource) where the data will be stored, along with the field which will be used for uniqueness (e.g. the employee Number when importing employees). Another option is to define the Import as a Live Import which means the data is never stored, rather fetched live on-demand. |

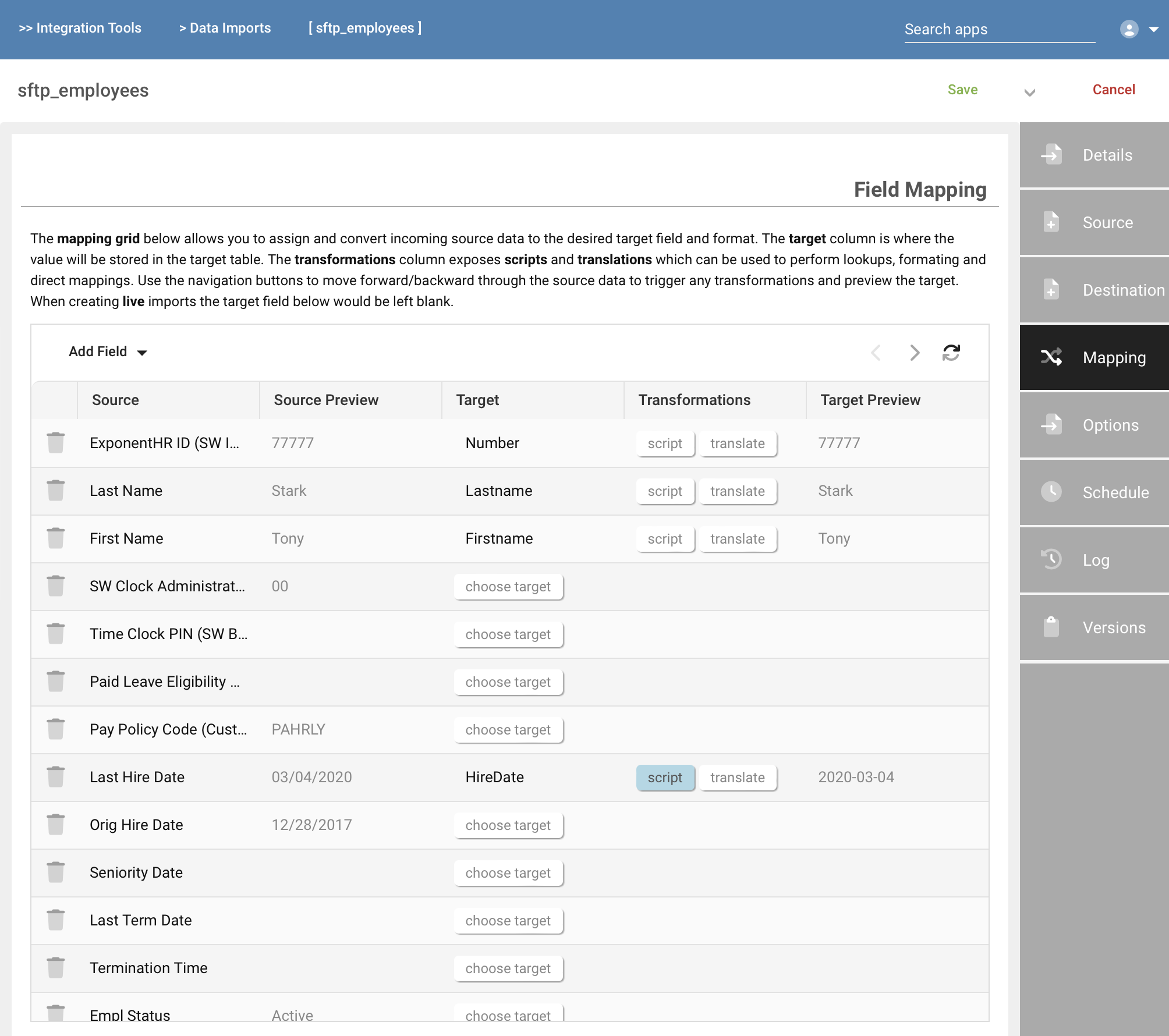

| Mapping | This is the core of the import service, whereby the incoming data values are mapped and translated as needed to fit the target format (e.g. convert date formats, lookup referential data) |

| Options | Optional request parameters which are inserted as headers into the GET requests for API style imports. While an API key might be stored in the Data Connection, an remote filter could be added to the Import Options instructing the API server to filter the selected records. |

| Schedule | Determines when the Import will run, such as nightly, hourly, etc. In addition, any Import can be run on-demand or as part of a workflow. |

| Log | Tracks the results of the Import service over time. To enhance performance, caching is used such that incoming source records which have not changed will be ignored (cached) vs going through the process of mapping and storing. The log also tracks the number of records inserted, updated, filtered (ignored) and total records. |

The screenshot below shows the mapping of incoming data to the target format.
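The Source & Filters, Destination, Mapping and Log definitions describe a pipeline that can be sketched roughly as follows: filter raw rows, skip rows whose content has not changed since the last run, map the rest (date conversion, referential lookup), upsert them on the uniqueness field, and count the results. This is an illustrative sketch only; `run_import`, the in-memory `store` and `cache` dictionaries, the SHA-256 change hash, the separate `cached` counter and the sample field names (`EmpNo`, `HireDate`, `Dept`, `Status`) are assumptions, not the platform's implementation.

```python
import hashlib
import json
from datetime import datetime
from typing import Callable, Dict, List

# Hypothetical referential lookup table used by the mapping below.
DEPARTMENTS = {"10": "Operations", "20": "Finance"}

# Mapping: target field -> function that derives it from a source row.
MAPPING: Dict[str, Callable[[dict], object]] = {
    "employee_number": lambda r: r["EmpNo"],
    # Convert MM/DD/YYYY to ISO 8601 (YYYY-MM-DD).
    "hire_date": lambda r: datetime.strptime(r["HireDate"], "%m/%d/%Y").strftime("%Y-%m-%d"),
    # Look up referential data, falling back for unknown codes.
    "department": lambda r: DEPARTMENTS.get(r["Dept"], "Unknown"),
}


def run_import(
    rows: List[dict],
    unique_field: str,
    mapping: Dict[str, Callable[[dict], object]],
    store: Dict[str, dict],
    cache: Dict[str, str],
    skip: Callable[[dict], bool] = lambda row: False,
) -> Dict[str, int]:
    """Filter, map and upsert source rows; return log-style counts."""
    counts = {"inserted": 0, "updated": 0, "filtered": 0, "cached": 0, "total": len(rows)}
    for row in rows:
        if skip(row):                                   # raw source filter
            counts["filtered"] += 1
            continue
        key = str(row[unique_field])                    # uniqueness field
        digest = hashlib.sha256(json.dumps(row, sort_keys=True).encode("utf-8")).hexdigest()
        if cache.get(key) == digest:                    # unchanged: skip mapping and storing
            counts["cached"] += 1
            continue
        target = {name: fn(row) for name, fn in mapping.items()}
        counts["updated" if key in store else "inserted"] += 1
        store[key] = target                             # upsert into the destination
        cache[key] = digest
    return counts
```

Running the same rows twice reports an unchanged row as cached on the second pass instead of updating it again.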

Exports

Exports send data from the platform to remote systems via file (SFTP) or API (HTTPS). Exports are generally used to send live data changes to a remote service; a common example is sending punches received from a time clock or phone to an external payroll system. Bulk exports are available through the Data View and Payroll Export apps.

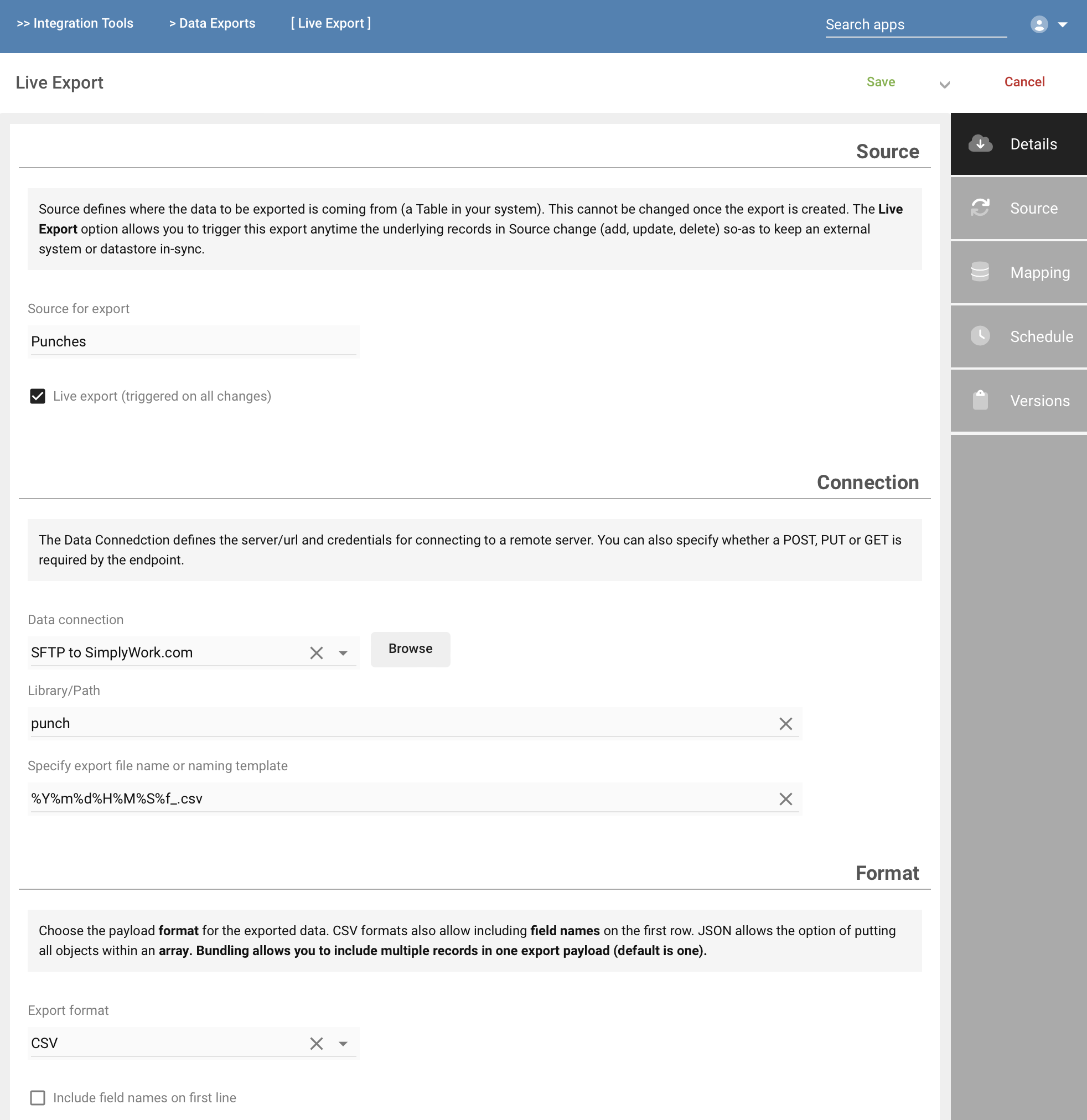

Exports contain the following definitions:

| Item | Description |
|---|---|
| Data Connection | The server connection, along with the API path or the file path and filename |
| Format | The payload format to send (CSV, XML or JSON) |
| Source Filters | Filters on the source rows that control which records are sent |
| Mapping | Mapping and translation of the data to the target format (e.g. formatting dates, hardcoded content) |
| Schedule | If the Export is not set up as a live export, the schedule on which to export the data |

The screenshot below shows the Export details and basic configuration.

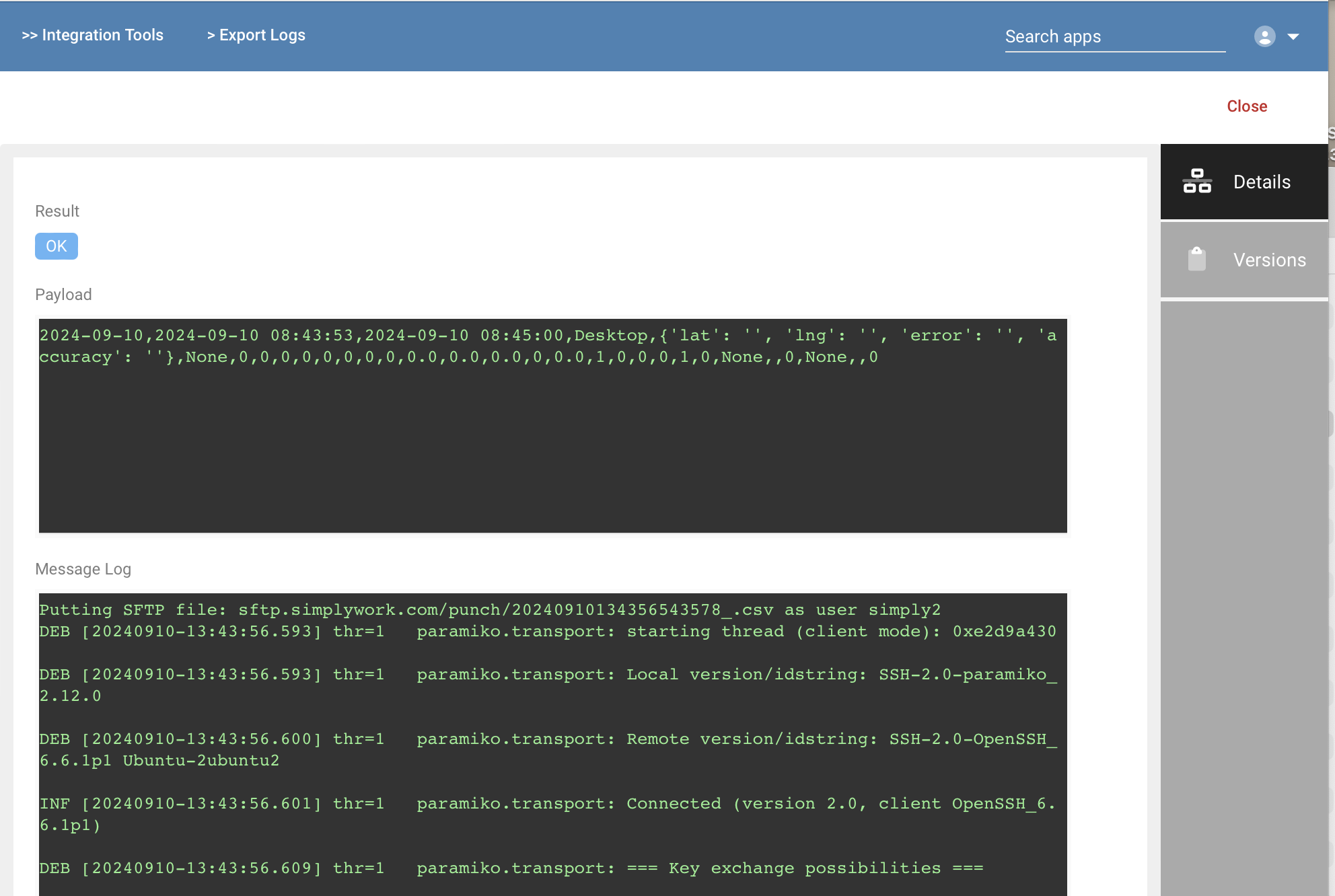
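The Source Filters, Mapping and Format steps of an Export can be sketched as a single function that filters source records, maps each one to the target fields, and serializes the result as CSV, JSON or XML. `build_payload`, `PUNCH_MAPPING` and the punch field names are hypothetical examples; the real payload layout depends on the receiving system.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET
from datetime import datetime
from typing import Callable, Dict, List

# Hypothetical mapping: target field -> function of a source punch record.
PUNCH_MAPPING: Dict[str, Callable[[dict], str]] = {
    "emp": lambda p: p["employee"],
    "date": lambda p: p["time"].strftime("%Y%m%d"),   # date formatting
    "time": lambda p: p["time"].strftime("%H:%M"),
    "source": lambda p: "CLOCK",                      # hardcoded content
}


def build_payload(records: List[dict], fmt: str, mapping: Dict[str, Callable[[dict], str]],
                  keep: Callable[[dict], bool] = lambda r: True) -> str:
    """Apply the source filter and mapping, then serialize as CSV, JSON or XML."""
    rows = [{name: fn(r) for name, fn in mapping.items()} for r in records if keep(r)]
    if fmt == "json":
        return json.dumps(rows)
    if fmt == "csv":
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=list(mapping), lineterminator="\n")
        writer.writeheader()
        writer.writerows(rows)
        return buf.getvalue()
    if fmt == "xml":
        root = ET.Element("records")
        for row in rows:
            record = ET.SubElement(root, "record")
            for name, value in row.items():
                ET.SubElement(record, name).text = str(value)
        return ET.tostring(root, encoding="unicode")
    raise ValueError(f"unsupported format: {fmt}")


# Sample punches from a time clock; the voided punch is filtered out.
punches = [
    {"employee": "1001", "time": datetime(2024, 5, 1, 8, 2), "type": "in"},
    {"employee": "1002", "time": datetime(2024, 5, 1, 8, 15), "type": "void"},
]
print(build_payload(punches, "csv", PUNCH_MAPPING, keep=lambda p: p["type"] != "void"))
# emp,date,time,source
# 1001,20240501,08:02,CLOCK
```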

Import & Export Logs

The Import and Export Logs track the result of each data event, including the mapped and translated payload, any error messages and related results. Low-level transport details are also logged to help uncover credential or certificate issues.

Export Queue

The Export Queue is a temporary buffer used when an outbound Export service cannot deliver its payload because of connectivity or server errors. If an error occurs during export, outgoing payloads are saved in the Export Queue in their original sending order. The system automatically retries entries in the Export Queue every 15 minutes until they are sent successfully. When more than one payload is queued, a bundle of records (sized as specified in the Export definition) can be transmitted at once to catch up. This avoids overloading the remote server or API while preserving the order and recovering from errors automatically.
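The retry behaviour of the Export Queue can be sketched as a FIFO buffer that sends the oldest payloads first, in bundles, and stops at the first failure so that nothing is sent out of order. This is a minimal sketch assuming a `send` callable that returns `True` on success; `ExportQueue`, `send` and `RETRY_INTERVAL_SECONDS` are hypothetical names, and the scheduler that would call `retry` every 15 minutes is not shown.

```python
from collections import deque
from itertools import islice
from typing import Callable, Deque, List

# Hypothetical constant: a scheduler (not shown) would call retry() on this interval.
RETRY_INTERVAL_SECONDS = 15 * 60


class ExportQueue:
    """FIFO buffer for export payloads that could not be delivered."""

    def __init__(self, bundle_size: int = 1) -> None:
        self.pending: Deque[dict] = deque()
        self.bundle_size = max(1, bundle_size)

    def enqueue(self, payload: dict) -> None:
        """Save a failed payload, keeping the original sending order."""
        self.pending.append(payload)

    def retry(self, send: Callable[[List[dict]], bool]) -> int:
        """Send queued payloads oldest-first in bundles; return how many were delivered."""
        delivered = 0
        while self.pending:
            bundle = list(islice(self.pending, self.bundle_size))
            try:
                ok = send(bundle)
            except Exception:
                ok = False                   # treat transport errors as a failed attempt
            if not ok:
                # Leave the bundle at the front so order is preserved for the next retry.
                break
            for _ in bundle:
                self.pending.popleft()
            delivered += len(bundle)
        return delivered
```

Stopping at the first failed bundle, rather than skipping ahead, is what keeps later payloads from overtaking earlier ones.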

Embedding & Theming

In addition to data integration via file and API, integration also covers embedding and styling. Embedding allows third-party applications to embed the UX directly in their platform, with their own styling and theming.

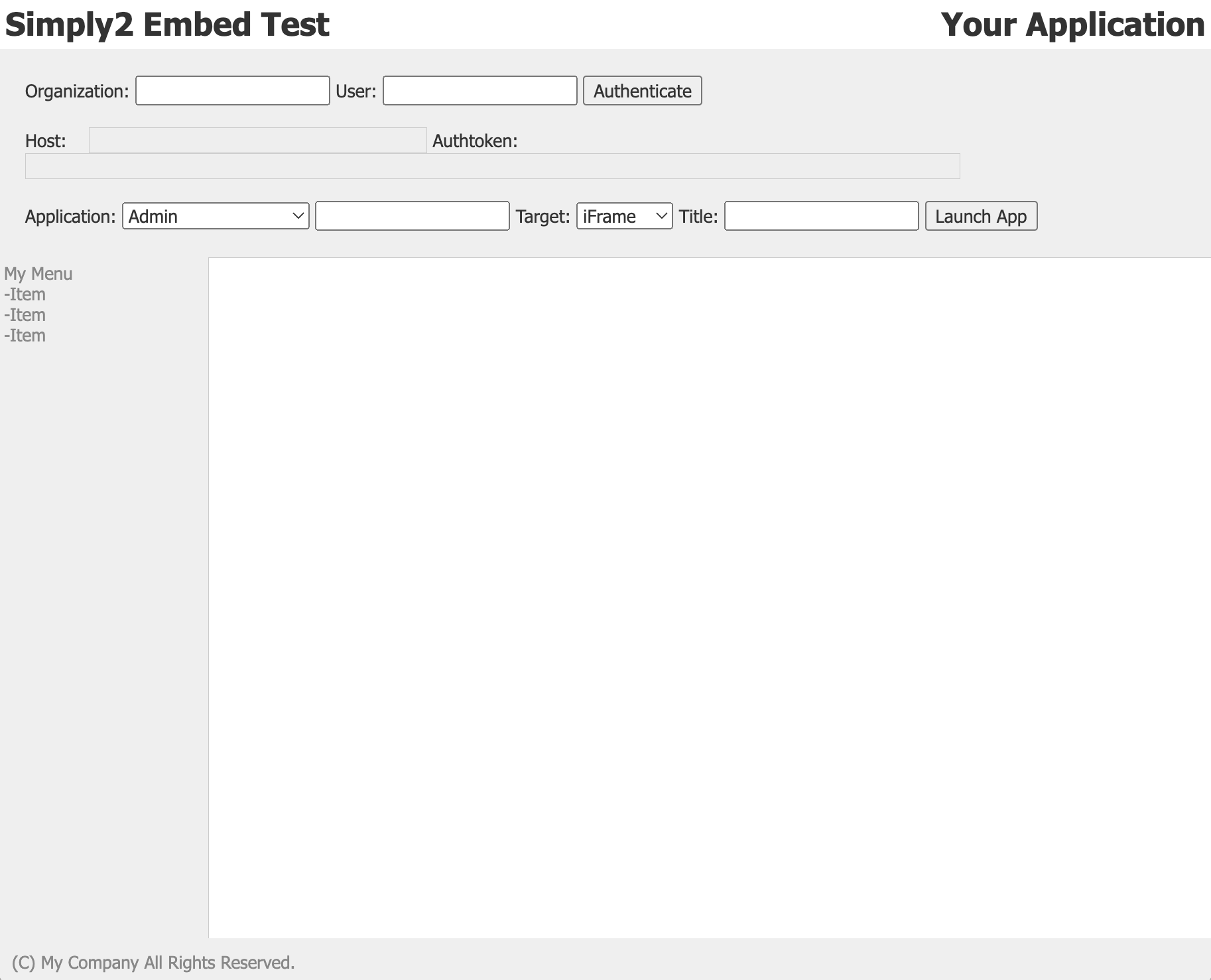

Embedding

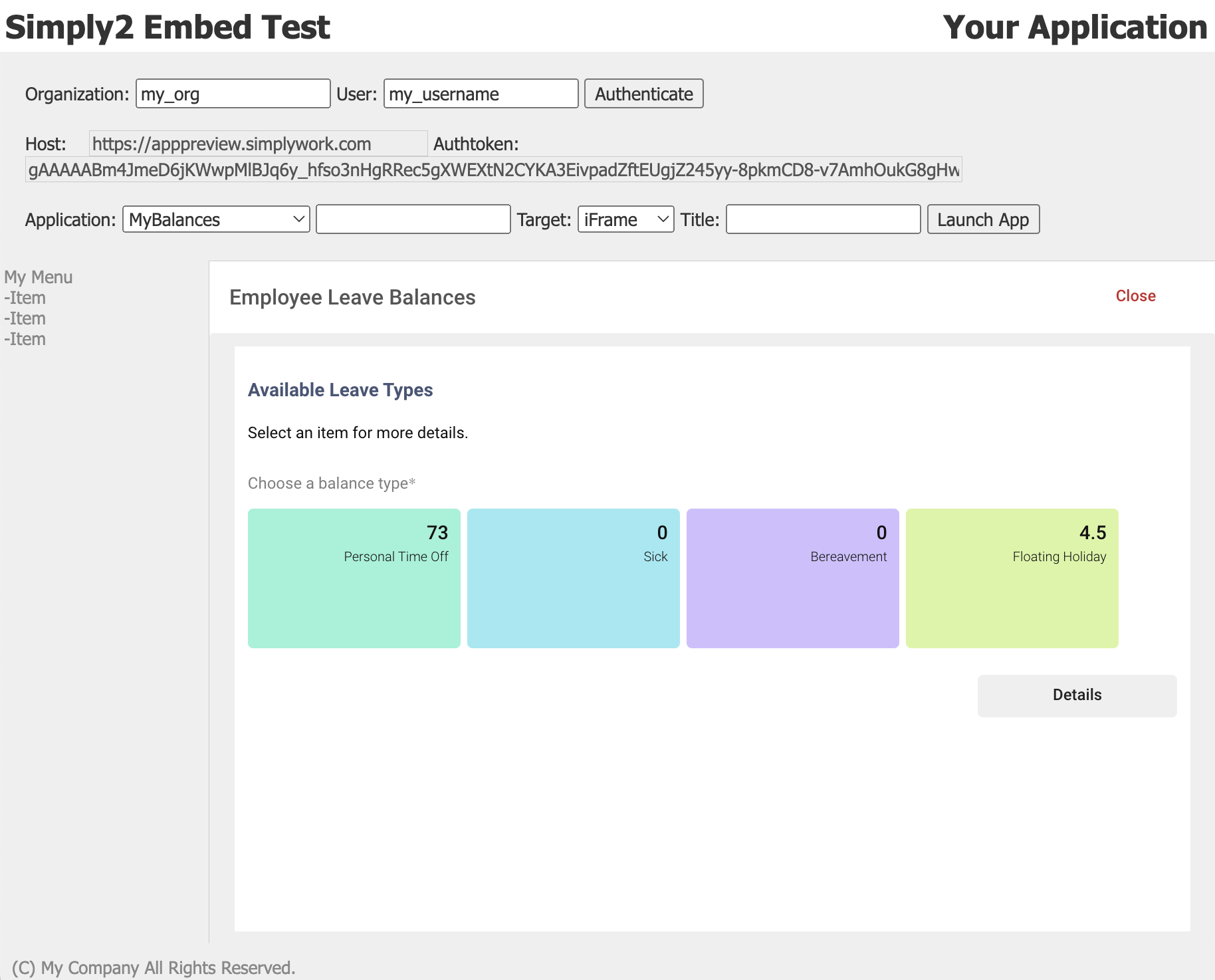

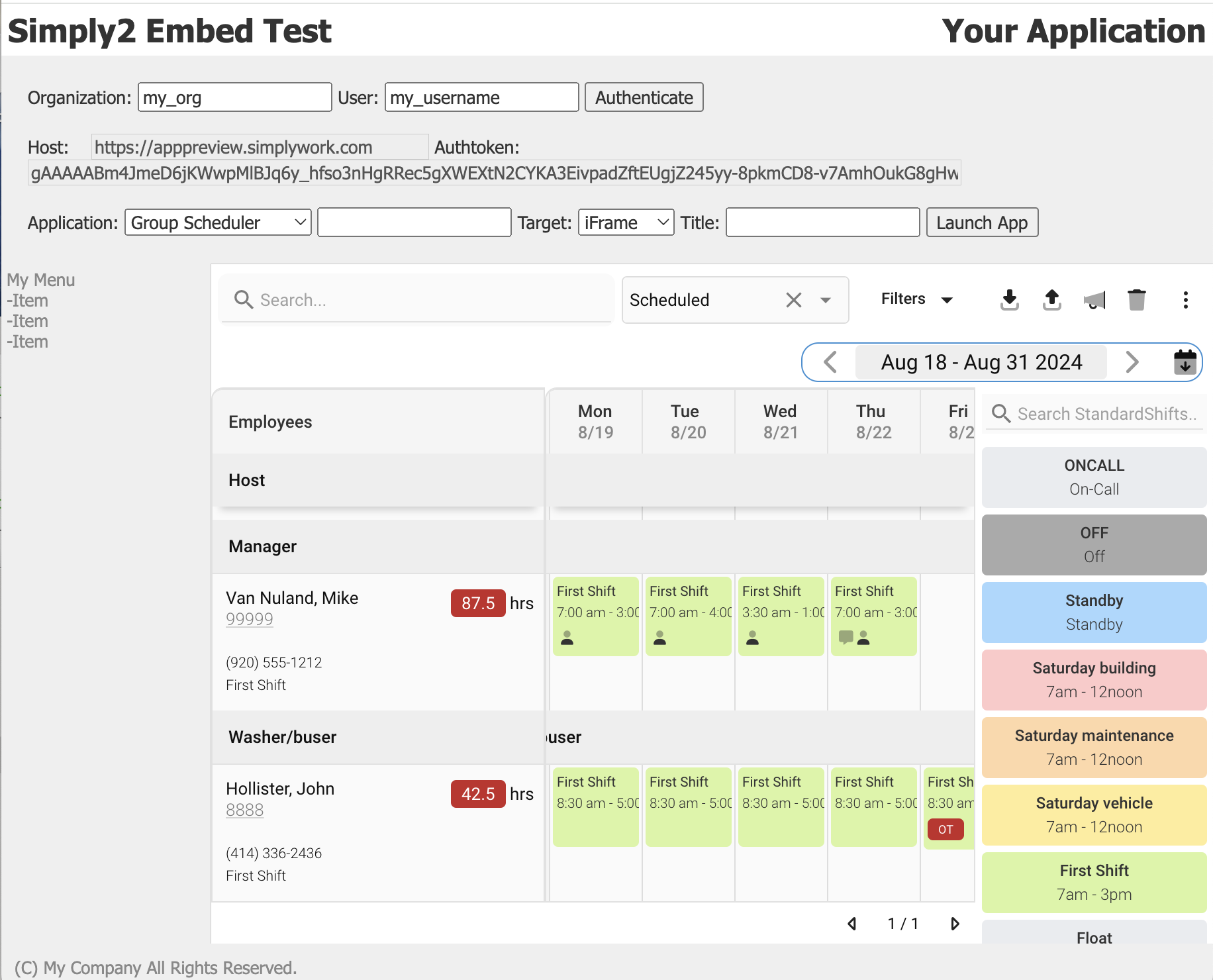

A sample app demonstrates how to embed the platform UX within a third-party application or page. The UX can be embedded in an iFrame on an existing page or launched in a new browser tab. Authentication is via SSO and/or Bearer Token.

When embedding or launching a new tab, the application's menu and navigation can be hidden to create a fully integrated appearance within the third-party application. When launching a new tab, the tab caption can also be specified.

The screenshot below shows a sample app with an embedded iFrame ready to launch. The first step is to authenticate, which will return the authorization token.

After authenticating, the third-party app selects an application to run and the target container (iFrame or tab). In the screenshot below, the Employee Balances app is run within the iFrame on the current page:

The screenshot below demonstrates running the Group Scheduler app within the iFrame component on the third-party application:
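The launch options (which app to run, whether to hide the menu and navigation, and the tab caption) can be illustrated with a small helper that builds a launch URL and the matching iFrame markup. The `/launch` path and the `app`, `hideNav` and `caption` parameter names are hypothetical placeholders, since the platform's actual URL format is not documented here; authentication (SSO or Bearer Token) is deliberately left out of the sketch.

```python
from html import escape
from urllib.parse import urlencode


def launch_url(base_url: str, app: str, hide_navigation: bool = False, tab_caption: str = "") -> str:
    """Build a hypothetical launch URL; all parameter names are placeholders."""
    params = {"app": app}
    if hide_navigation:
        params["hideNav"] = "true"          # hide menu and navigation for a seamless look
    if tab_caption:
        params["caption"] = tab_caption     # only meaningful when launching a new tab
    return base_url.rstrip("/") + "/launch?" + urlencode(params)


def iframe_html(src: str, width: str = "100%", height: str = "600") -> str:
    """Wrap a launch URL in iFrame markup, escaping the URL for the HTML attribute."""
    return f'<iframe src="{escape(src, quote=True)}" width="{width}" height="{height}"></iframe>'
```

For the new-tab option, the same URL would be opened in a browser tab instead of being placed in an iFrame.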

Theming

Theming includes the ability to set the following attributes in your platform instance:

| Item | Description |
|---|---|
| Tab icon | The icon displayed in the browser tab (normally set via the page metadata) |
| Tab caption | The caption displayed in the browser tab |
| Logo | The logo displayed at the top of the navigation menus |
| Primary colors | The color of the primary UX elements, such as the menu background, buttons and key display elements |
| CSS | Any other CSS overrides, such as fonts, overall sizes, padding, spacing or other lower-level styling |